Scientists are now using AI to help humans understand what dogs are trying to communicate with their barks. When I wrote my new Policy Brief, “Artificial Intelligence Regulation Threatens Free Expression,” this wasn’t exactly the type of expression I had in mind, but it nonetheless proves my point.

The fast-moving AI space is drawing so much attention and money because of its ability to radically change how we access information and communicate, apparently even with man’s best four-legged friends. Generative AI’s ability to create text, imagery, and audio in ways that mimic human intelligence, and to do so quickly and at relatively low cost, is going to change the way we interact with each other and with the information in our world.

Given this massive expressive potential, we should be concerned about efforts to restrict the speech that AI can produce. Of course, there are some objectively dangerous types of output that we may want to prevent AI from producing, such as malware or instructions for making chemical weapons. But limiting AI speech because it isn’t diverse enough or because some groups would find it offensive is not healthy for a culture of free expression.

Thankfully, the market is likely to respond to the demand for all sorts of speech and viewpoints by providing a host of personalized AI tools…unless the government gets in the way first. My new paper describes two ways that government regulation will harm free expression.

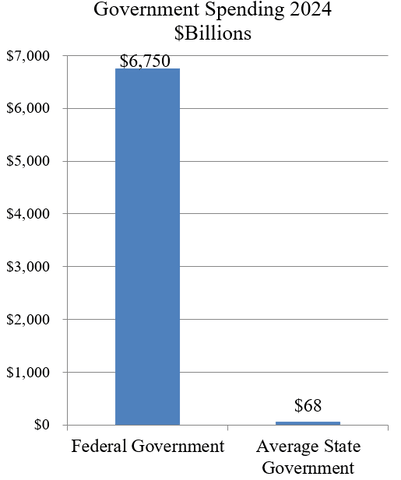

First, by making it harder for new companies to enter the market, suffocating regulations will cement the dominance of current big tech companies over AI. Consumers won’t get the expanded choice and innovation that would meet their needs and reflect their viewpoints.

Second, government regulation could explicitly target certain types of products or speech as “harmful.” When talking about potential AI harms, policymakers might be concerned about the impact of AI on jobs or the dystopian future of killer AIs like in Terminator or The Matrix. But another common harm that many elites and governments fear is the ability of AI to spread certain perspectives that are considered misinformation or hate speech. And so governments may also try to stifle the expressive potential of AI by restricting speech that they don’t like.

Instead of restrictions, regulations, and censorship, my paper proposes a light-touch approach to AI regulation. Existing rules and authorities are already being applied by government agencies and courts, so this isn’t the Wild West. In some jurisdictions, such as the EU, existing laws are so burdensome that they are already crippling innovation, indicating the need for fewer rules rather than more. By relying on existing law together with forms of soft law, such as the development of social norms and best practices and improved AI literacy, policymakers can incentivize greater AI innovation and thus greater expression.

Whether you are using AI to better understand your dog or better communicate with your fellow Americans, the expressive possibilities are immense as long as we pursue an innovation-first approach to AI.